Are you looking for ways to evaluate the effectiveness of a training program or course? If so, you may already know that there are a number of approaches you can take. In this post, we’ll look at three of the most widely used methods of evaluating training effectiveness and how to use them.

The three approaches are:

- The Kirkpatrick Taxonomy

- The Phillips ROI Methodology

- The CIPP Evaluation Model

1. The Kirkpatrick Taxonomy

The Kirkpatrick Taxonomy is perhaps the most widely used method of evaluating training effectiveness. Developed by Don Kirkpatrick in the 1950s, this framework offers a four-level strategy that anyone can use to evaluate the effectiveness of any training course or program.

The four levels are:

- Level 1: Reaction

- Level 2: Learning

- Level 3: Behavior

- Level 4: Results

Here’s how each level works:

Level 1: Reaction

At this level, you gauge how the participants reacted or responded to the training. Asking the participants to complete a short survey will help you identify whether the conditions for learning were present.
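The taxonomy doesn’t prescribe a survey format. The following is a minimal sketch of how Level 1 responses could be summarized. It assumes a 1-to-5 rating scale and treats a 4 or 5 as a favorable response; the scale, the threshold, and the `summarize_reaction` name are all hypothetical.

```python
from statistics import mean

# Hypothetical Level 1 survey: each participant rates the session from
# 1 (very poor) to 5 (excellent). Treating 4 or above as "favorable" is an
# illustrative assumption, not part of the Kirkpatrick taxonomy.
FAVORABLE_THRESHOLD = 4


def summarize_reaction(ratings: list[int]) -> dict[str, float]:
    """Return the mean rating and the percentage of favorable responses."""
    if not ratings:
        raise ValueError("no survey responses")
    if any(r < 1 or r > 5 for r in ratings):
        raise ValueError("ratings must be on a 1-5 scale")
    favorable = sum(1 for r in ratings if r >= FAVORABLE_THRESHOLD)
    return {
        "mean_rating": mean(ratings),
        "percent_favorable": 100 * favorable / len(ratings),
    }


if __name__ == "__main__":
    # Six hypothetical responses: mean is about 3.83, and 4 of 6 are favorable.
    print(summarize_reaction([5, 4, 3, 4, 2, 5]))
```

A low favorable percentage is a prompt to look at the learning conditions. It says nothing on its own about whether people learned anything, which is what Level 2 checks.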

Level 2: Learning

The second stage is to gauge what the participants learned from the training. Most commonly, short quizzes or practical tests are used to assess this: one before the training and one afterward.
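With a test before and a test after, the simplest comparison is the change in each participant’s score. The sketch below assumes both tests are scored on the same scale and that scores are listed in the same participant order. The `learning_gain` name and the example scores are hypothetical, and the raw score difference is only one possible measure of learning.

```python
from statistics import mean


def learning_gain(pre_scores: list[float], post_scores: list[float]) -> dict[str, float]:
    """Compare matched pre-test and post-test scores.

    Scores at the same index must belong to the same participant.
    """
    if not pre_scores or len(pre_scores) != len(post_scores):
        raise ValueError("need exactly one pre- and one post-test score per participant")
    # Per-participant change from the pre-test to the post-test.
    gains = [post - pre for pre, post in zip(pre_scores, post_scores)]
    return {
        "mean_pre": mean(pre_scores),
        "mean_post": mean(post_scores),
        "mean_gain": mean(gains),
        "share_improved": sum(1 for g in gains if g > 0) / len(gains),
    }


if __name__ == "__main__":
    # Hypothetical quiz scores (percent correct) for three participants.
    print(learning_gain([55, 60, 70], [75, 80, 72]))
```

Looking at `share_improved` alongside the mean keeps a few large gains from hiding the fact that some participants didn’t improve at all.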

Level 3: Behavior

The third stage takes place some time after the training. Using various assessment methods, you try to determine whether the course participants have put what they learned into practice on the job. To assess this, you may ask participants to complete self-assessments or ask their supervisors to assess them formally.

Level 4: Results

Lastly, you need to evaluate whether the training met the stakeholders’ expectations. In most companies or organizations, the stakeholders are usually the management or executives who decided to implement the training in the first place. The goal is to determine the return on these expectations, known as ROE (Return on Expectations).
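The post doesn’t spell out how ROE should be recorded. One simple approach assumes the stakeholders agreed on measurable targets in advance. You then check each target against the measured result, noting whether a higher or a lower value counts as success. The `Expectation` class, `roe_summary` function, and example metrics are hypothetical; the metrics echo the watchmaker example discussed under the limitations below.

```python
from dataclasses import dataclass


@dataclass
class Expectation:
    """One stakeholder expectation with its agreed target and measured result."""
    metric: str
    target: float
    actual: float
    higher_is_better: bool = True

    def met(self) -> bool:
        # For metrics such as wait times, a lower value is the better outcome.
        if self.higher_is_better:
            return self.actual >= self.target
        return self.actual <= self.target


def roe_summary(expectations: list[Expectation]) -> dict:
    """List which expectations were met or missed, and the share met."""
    if not expectations:
        raise ValueError("no expectations to evaluate")
    met = [e.metric for e in expectations if e.met()]
    missed = [e.metric for e in expectations if not e.met()]
    return {"met": met, "missed": missed, "share_met": len(met) / len(expectations)}


if __name__ == "__main__":
    print(roe_summary([
        Expectation("repair wait time (days)", target=5, actual=6, higher_is_better=False),
        Expectation("customer satisfaction (%)", target=85, actual=88),
    ]))
```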

Limitations of the Kirkpatrick Taxonomy

Anecdotally, most people have heard of Kirkpatrick and fall into one of two camps:

- You may use the term ‘Kirkpatrick’ but not follow the full taxonomy. Your organization may not require anything more than simple ‘smile sheet’ feedback forms after each training session.

- You may have read and agreed with the taxonomy but have little idea how to apply it beyond the basic Level 1 feedback forms handed out after training.

Whichever camp you fall into, the Kirkpatrick taxonomy undeniably has some limitations. For starters, it is often referred to as a ‘model’ or ‘theory’ when in reality it has little scientific grounding.

A more stinging criticism is that the Kirkpatrick approach gathers little data that helps actually improve training. For example, if a watchmaker runs a training program designed to decrease customer wait times for repairs, yet the wait times don’t decrease afterward, the Kirkpatrick taxonomy only tells us that the training ‘didn’t work’; it doesn’t help to improve the training.

The main limitation, and the most common criticism, of the Kirkpatrick taxonomy is that there’s little evidence to support the idea of linear causality, i.e., that a favorable response at Level 1 will lead to better learning outcomes at Level 2 or to improved on-the-job performance at Level 3. Research has found no causal link between the first two levels, even though Kirkpatrick’s presentation of the levels as a taxonomy suggests that there would be one.

A fourth limitation of the Kirkpatrick taxonomy is that it measures success in terms of return on stakeholder expectations (ROE). Some firms want a traditional return on investment evaluation, in which the cost of the training is set against the benefits it delivered to the company.

How to use the Kirkpatrick taxonomy effectively

In fairness to Don Kirkpatrick, he addressed many of the limitations laid out above. In particular, he suggested working backwards through his four levels during the design phase of any training program. This approach helps ensure that an organization first decides which outcomes it wants to achieve, and then designs or develops the training accordingly.

This approach was pioneered by the late Grant Wiggins and Jay McTighe in their book, Understanding by Design (UbD®). The UbD® framework is used by educators across the world when designing courses and content units. The Kirkpatrick taxonomy is best applied in this fashion, so that the stakeholders or management begin with the outcomes in mind.

Here’s how to apply the Kirkpatrick taxonomy effectively according to UbD® principles:

- Decide which business results you are targeting (Level 4: Results).

- Determine whether the training matches the stakeholders’ expectations.

- Identify the on-the-job behavior or performance changes you would need to see to show that the trainees had achieved those results (Level 3: Behavior).

- Define the learning objectives that will develop that on-the-job behavior (Level 2: Learning).

- Decide how to deliver the necessary instruction in an engaging and appealing way (Level 1: Reaction).

As you can see, the UbD® framework is a helpful way of implementing the Kirkpatrick taxonomy. Decide what results you want to see first, and then plan what you’ll need to include in the training in order to get there.

2. The Phillips ROI Methodology

The second method for evaluating training effectiveness that we’ll discuss is the Phillips ROI Methodology. When Jack Phillips published his own work on training evaluation in 1980, the Kirkpatrick taxonomy was already well established as the dominant training evaluation model. However, Phillips wanted to address several of the shortcomings he saw in the Kirkpatrick taxonomy. His ROI methodology is best thought of as an expanded version of Kirkpatrick’s taxonomy.

The Phillips ROI Methodology has five levels:

Level 1: Reaction

In common with the Kirkpatrick taxonomy, the Phillips methodology evaluates the participants’ reaction.

Level 2: Learning

The second level evaluates whether learning took place.

Level 3: Application and Implementation

The original Kirkpatrick taxonomy evaluated behavior in the workplace to see whether the learning translated into on-the-job behavior. Phillips expanded this level to cover both application and implementation. This addresses one of the central criticisms of the Kirkpatrick taxonomy: that it doesn’t gather enough data to help improve training.

Phillips’ methodology makes it far easier to see why training does or doesn’t translate into workplace changes. If there is a problem, did it lie with the application or the implementation? For example, was the learning applied incorrectly? Or was the on-the-job training implemented ineffectively?

Level 4: Impact

While the fourth level of the Kirkpatrick taxonomy focuses purely on results, Phillips’ methodology is much broader. His level 4 – Impact – helps identify whether factors other than training were responsible for delivering the outcomes.
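Phillips’ methodology treats isolating the effects of training as a distinct step, and comparing against a control group is one technique commonly used for it. The sketch below shows that comparison as a simple difference-in-differences calculation. The function name, metric, and figures are hypothetical. A real evaluation would also need the two groups to be genuinely comparable and enough data to rule out chance.

```python
import math


def isolate_training_effect(
    trained_before: float,
    trained_after: float,
    control_before: float,
    control_after: float,
) -> dict[str, float]:
    """Estimate how much of an improvement can be attributed to training.

    The change in an untrained but otherwise similar control group stands in
    for what would have happened anyway (market shifts, new tools, etc.).
    """
    trained_change = trained_after - trained_before
    control_change = control_after - control_before
    effect = trained_change - control_change
    # The attributable share is undefined if the trained group didn't change.
    share = effect / trained_change if trained_change != 0 else math.nan
    return {"training_effect": effect, "share_attributable": share}


if __name__ == "__main__":
    # Hypothetical weekly repairs completed per technician.
    print(isolate_training_effect(100, 130, 100, 115))
```

Here the trained group improved by 30 but the control group also improved by 15, so only half of the trained group’s improvement is credited to the training.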

Level 5: Return on investment (ROI)

Unlike the Kirkpatrick taxonomy, which simply measures training results against stakeholder expectations (ROE), Phillips’ methodology contains a fifth level specifically for measuring return on investment (ROI). This level uses cost-benefit analysis to determine the value of training programs. It helps companies measure whether the money they invested in the training produced measurable results.

Here’s how it works:

You gather business data from before, during and after the training and look for quantifiable factors, such as process improvements, productivity improvements, or increased profits, depending on the nature of the training. You then compare the cost of the training with the value that it provided. This gives you an indication of the value of the training to the company’s bottom line.
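Phillips typically reports this comparison in two forms. The first is the benefit-cost ratio (BCR): program benefits divided by program costs. The second is ROI as a percentage: net program benefits (benefits minus costs) divided by program costs, multiplied by 100. The minimal sketch below uses hypothetical figures and assumes the benefits have already been converted to money and isolated to the training.

```python
def benefit_cost_ratio(benefits: float, costs: float) -> float:
    """BCR = program benefits / program costs."""
    if costs <= 0:
        raise ValueError("program costs must be positive")
    return benefits / costs


def roi_percent(benefits: float, costs: float) -> float:
    """ROI (%) = (program benefits - program costs) / program costs x 100."""
    if costs <= 0:
        raise ValueError("program costs must be positive")
    return (benefits - costs) / costs * 100


if __name__ == "__main__":
    # Hypothetical figures: monetized benefits and fully loaded program costs.
    benefits, costs = 150_000, 100_000
    print(benefit_cost_ratio(benefits, costs))  # 1.5
    print(roi_percent(benefits, costs))         # 50.0
```

A BCR of 1.5 and an ROI of 50% describe the same result: each unit of currency spent returned 1.50 in benefits, or 0.50 after the cost is recovered. In practice, the hard part is producing a credible benefit estimate, not the arithmetic.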

3. The CIPP Evaluation Model

The third approach to evaluating training effectiveness that we’ll discuss is the CIPP model, developed in the 1960s by Daniel Stufflebeam. Often referred to as the Stufflebeam model, CIPP is an acronym for the following four areas of evaluation:

- Context

- Input

- Process

- Product

CIPP evaluates these areas when judging the value of a program. Unlike the Kirkpatrick taxonomy and the Phillips ROI Methodology, CIPP is less about proving what you did and more about improving what you’re doing. Arguably, this makes it more useful for businesses and organizations.

The CIPP model was later expanded to include Sustainability, Effectiveness, and Transportability. It offers a decision-centered approach to the evaluation of programs.

CIPP model: How it works

The CIPP model aims to link evaluation with the decision-making that goes into running a training course or program. Each of the four aspects of evaluation above (context, input, process, and product) is used to provide an analytic basis for the decisions that go into a program. These decisions can be grouped into five main areas:

- Planning

- Structuring

- Implementing

- Reviewing

- Revising

By examining each of these areas through the four aspects of evaluation, the decision-makers behind any training course gain a logical framework for making decisions. The CIPP model makes it easier for businesses to answer four main questions:

1. What do we need to do?

This involves looking at the concerns, needs, attitudes, and perceptions of the business, and collecting and analyzing assessment data. This process helps businesses decide on their most pressing goals. In other words, which area(s) do they need to prioritize? What should their objectives be?

2. How should we approach training?

The next step is to begin researching successful training materials or programs to decide on the best approach. Should you hire external experts to deliver the training? Or develop your own in-house approach? This step helps identify the right resources that a company needs to meet its objectives, and the steps they’ll need to take to get there.

3. Are we on the right track?

This stage involves continually monitoring the training program and fine-tuning its direction. By looking at factors such as the delivery, staff morale, arising conflicts and the results of testing data, businesses can see how well the training is being implemented. This stage helps identify problems as they arise, giving the training providers time to fix or address any concerns.

4. How successful was the program?

The final stage is to measure the outcomes of the training and compare them to the expected outcomes. This helps businesses determine the value of the training. Should it be continued? Modified? Or discontinued?

How CIPP works in the different stages of evaluation

Unlike the Kirkpatrick taxonomy or the Phillips ROI methodology, the CIPP model lets stakeholders or decision-makers evaluate a training program before, during, and after it runs. This provides an opportunity to tailor the training to the specific needs of the participants, fine-tune it while it is being implemented, and assess its impact after it wraps up.

The four aspects of the evaluation (context, input, process, and product) can be applied as both formative evaluation (before the training) and summative evaluation (after the training).

Using CIPP as formative assessment

To use the four areas of evaluation before the program, these are the types of questions you’d ask:

Context: What do we need to do?

Input: How should we approach training?

Process: Are we on the right track?

Product: Does this program have a successful track record?

These questions help improve the quality of the training provided and ensure that the stakeholders’ goals are met.

Using CIPP as summative assessment

CIPP can also be used as a form of summative assessment, to identify what went right and wrong in a training course or program. Here are some questions you may ask:

Context: Did the training address our needs?

Input: Was the training well designed?

Process: Did the training stay on the right track? Why or why not?

Product: How successful was the program in meeting our goals?

The CIPP model is a helpful way of determining the right type of training that a business or organization needs and how best to implement and monitor it.

To learn more about the CIPP model, check out Western Michigan University’s Evaluation Center. It offers checklists and an online bibliography of literature related to the CIPP model.

Conclusion

As you’ve seen, each of the three most widely used training evaluation models (the Kirkpatrick taxonomy, the Phillips ROI Methodology, and the CIPP or Stufflebeam model) has its own advantages and disadvantages. Finding the right one for your organization will depend on your budget and the time and resources you have available.

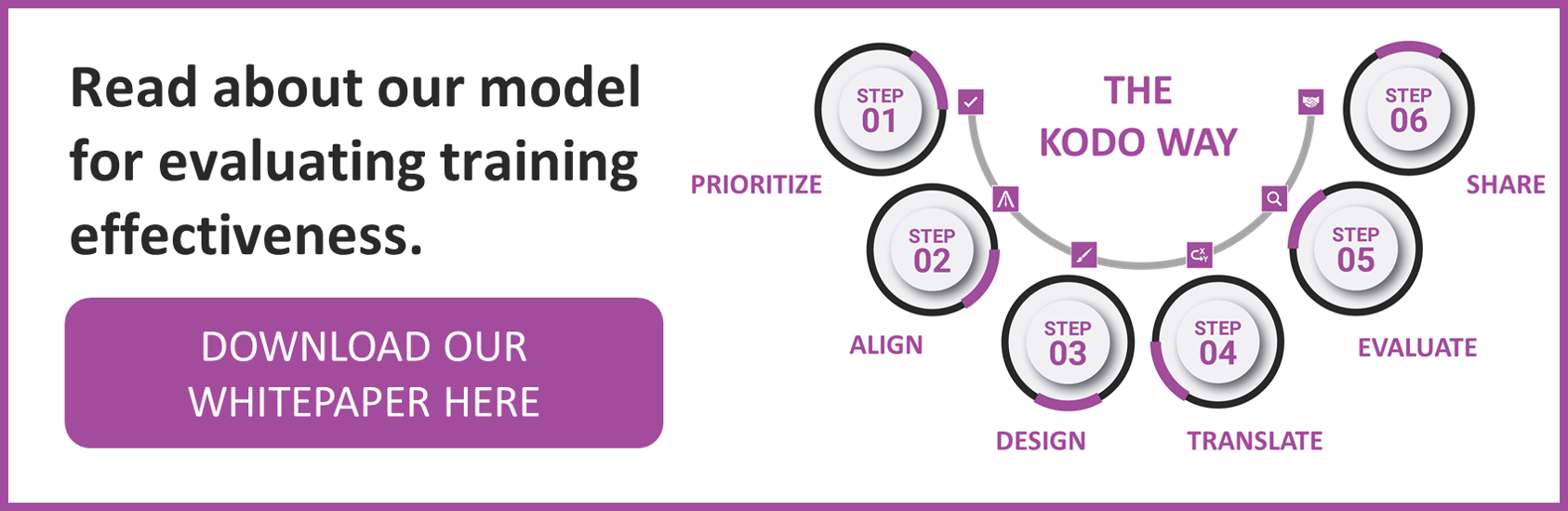

If you’re keen to find ways of evaluating the effectiveness of your course, download our free white paper here.
